The future is going to label your work. The only question is whether you get to choose the label.

Right now, we are still in a rare window of time.

You can still decide how you disclose technology use in your work.

You can still define your own transparency standards.

You can still communicate authorship on your terms, in plain language, without an algorithm acting like it knows your intent better than you do.

That window is not guaranteed to stay open.

Because if you think big corporate and government systems are going to sit back and let creativity stay self-regulated forever, you haven’t been paying attention.

They are already building their frameworks.

They are already standardizing metadata.

They are already designing systems that will “classify” your work.

And when those systems become normal, creators are going to find themselves in a position they never asked for:

A software publisher, platform, or agency will decide what your work is, and how it must be labeled.

CAHDD™ exists for one reason:

To make sure creators get to keep the steering wheel a little longer.

Not by fighting the system.

Not by pretending we can outspend or out-engineer billion-dollar pipelines.

But by doing something the big systems cannot do.

We provide a human-readable language of honesty.

CAHDD™ is not trying to win a standards war

People always ask the wrong question.

“Is CAHDD™ worth adopting if it might not go mainstream?”

“Can CAHDD™ really survive against corporate standards or government authorship systems?”

Here is the honest answer.

CAHDD™ was never built to compete with big systems.

We are not trying to become the official enforcement authority for creativity.

We are not trying to build the next cryptographic provenance standard.

We are not trying to embed certification software into every camera, platform, and browser.

Frankly, if we tried, we would become exactly what we oppose.

A bureaucracy.

CAHDD™ is old-school by design.

Voluntary.

Visual.

Self-elected.

Non-governed.

No gatekeepers.

No corporate sponsor deciding who’s “allowed” to call themselves transparent.

That is not a weakness.

That is the whole point.

CAHDD™ doesn’t need to defeat anyone.

It needs to outlast them.

Big systems track pixels. CAHDD™ tracks intent.

Corporate or government provenance tools are trying to solve machine-level problems:

Was the image altered?

Was AI used?

Was the audio tampered with?

Can we cryptographically verify the origin of this file?

That’s fine.

That has a place, especially for journalism, deepfake prevention, fraud detection, and legal compliance.

But CAHDD™ solves a different problem.

The human-level authorship problem.

Questions like:

How much of this was human work vs. automation?

How much judgment did the creator apply?

Was this created with care, or churned out?

Where is the human fingerprint in the process?

And here is what the big systems will never be able to tell you:

A machine can detect AI usage.

It cannot detect honesty.

It cannot detect taste.

It cannot detect sacrifice.

It cannot detect meaning.

It cannot detect intent.

CAHDD™ exists because humans still deserve credit for being human.

When your work gets labeled by default, your identity becomes negotiable

When corporate provenance systems mature, the labeling will become automatic.

The platforms will decide what is “AI-assisted.”

They will decide what is “human.”

They will decide what is “synthetic.”

They will decide what is “original.”

They will decide what is “derivative.”

And because they need scale, they will oversimplify.

They will flatten the entire creative process into a binary narrative:

AI or Not AI.

That is not reality.

That is convenience.

CAHDD™ exists because the truth is more detailed than a checkbox.

Human creation is not either/or.

It is a spectrum.

It is a pipeline.

It is a set of decisions.

And decisions are where accountability lives.

That is why we stage it.

CAHDD™ works with metadata, not against it

Some people assume CAHDD™ is anti-metadata or anti-standards.

That’s lazy thinking.

If embedded provenance standards become widespread, CAHDD™ becomes even more important, not less.

Because metadata can tell the world:

“AI was used.”

But CAHDD™ can tell the world:

“This is how it was used, and this is how much authorship remained human.”

Metadata can show the machine footprint.

CAHDD™ shows the human fingerprint.

Those two things can coexist beautifully.

But only one of them respects the creator as a thinking being.

CAHDD™ is worth adopting even if it never becomes mainstream

People treat success like only one thing counts:

Did it become the global standard?

That is not the only win condition.

CAHDD™ already wins if it accomplishes any of the following:

First, it creates vocabulary.

If you give people language, you give them power.

Movements don’t need permission once their vocabulary spreads.

Second, it becomes a badge of credibility.

Not everybody wears a badge.

But the ones who do tend to get hired first.

Clients trust people who don’t hide the process.

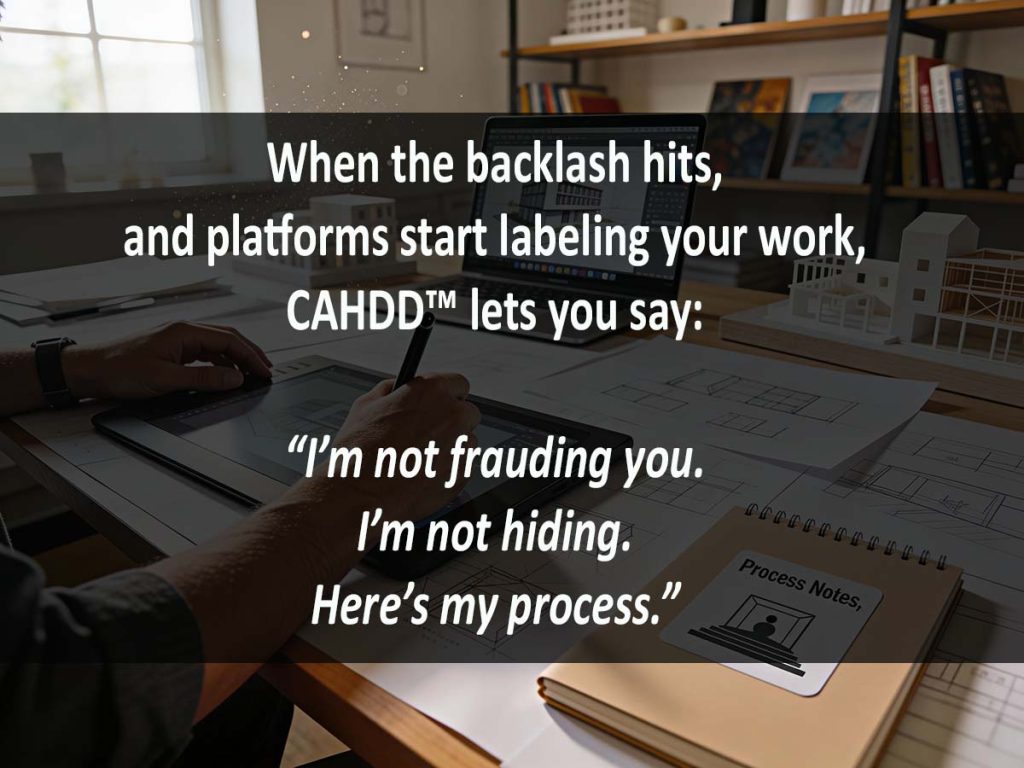

Third, it becomes a shield.

The backlash against automation is coming.

When that wave hits harder, creators will need a way to say:

I’m not faking.

I’m not hiding.

Here’s what I actually did.

CAHDD™ becomes a credibility anchor.

Low-risk.

High-integrity.

You can adopt it in ten minutes.

No logins.

No software.

No corporate permission slip.

Just honesty.

The real threat is not defeat. The real threat is absorption.

Now let’s talk about the ugly part.

CAHDD™ probably won’t get defeated head-to-head.

What’s more likely is something more slippery:

It gets absorbed.

Copied.

Imitated.

Rebranded.

Sterilized.

Bundled into a corporate toolchain.

Then the company smiles and says:

“This was inevitable. The industry is evolving.”

And suddenly the creator-led movement gets rewritten as a platform feature.

With the platform controlling the vocabulary.

Controlling the meaning.

Controlling the rules.

That is the battlefield.

Narrative capture.

So yes, we watch for reuse.

We monitor plagiarism.

We keep receipts.

We document timelines.

Because intellectual theft is not just about money.

It’s about power.

If you steal a movement’s language, you steal the future it was trying to create.

Why you should join CAHDD™ now

This is the moment where creators can still choose.

Not later, when “transparency” becomes mandatory and controlled.

Not later, when it becomes a compliance checkbox.

Not later, when every platform decides what counts and what doesn’t.

Now.

Join CAHDD™ while it is still a voluntary declaration.

A human choice.

A personal standard.

A signature.

Adopt the stages.

Use the icons.

Use the language.

Describe your pipeline.

Because if you do not define your authorship, someone else eventually will.

And when that happens, it won’t be built for your dignity.

It will be built for someone else’s convenience.

CAHDD™ is not trying to beat the system.

CAHDD™ is trying to keep humans visible inside it.

Call to Action

If you’re a creator, designer, engineer, writer, artist, architect, or maker, you can adopt CAHDD™ today.

Start by choosing your CAHDD™ stage for your next piece.

Add the watermark.

Add the disclosure language.

Put your human fingerprint back in the frame.

The movement is open.

The invitation is simple.

Honesty first.

This work reflects a CAHDD Level 2 (U.N.O.) — AI-Assisted Unless Noted Otherwise creative process.

Human authorship: Written and reasoned by Russell L. Thomas (with CAHDD™ editorial oversight). All final decisions and approvals were made by the author.

AI assistance: Tools such as Grammarly, ChatGPT, and PromeAI were used for research support, grammar/refinement, and image generation under human direction.

Images: Unless otherwise captioned, images are AI-generated under human art direction and conform to CAHDD Level 4 (U.N.O.) standards.

Quality control: Reviewed by Russell L. Thomas for accuracy, tone, and context.

Method: Computer Aided Human Designed & Developed (CAHDD™).